Kubernetes networking provides a flexible model that helps containers, pods, and services communicate seamlessly within a cluster. In the fast-paced world of containerization and distributed systems, Kubernetes has emerged as a powerful tool for orchestrating and managing applications. However, harnessing the full potential of Kubernetes requires a solid understanding of its networking capabilities. In this article, we delve into the realm of Kubernetes networking, unraveling its fundamentals and shedding light on the key components that make it all possible.

Table of Contents

Introduction to Kubernetes Networking

Kubernetes Networking Components

Network Namespaces and Container Networking Interfaces (CNIs)

Conclusion

Introduction to Kubernetes Networking

Kubernetes networking establishes the groundwork for efficient and reliable operations in containerized environments. It allows for the smooth flow of data and communication between various components of your applications. By understanding the basics of Kubernetes networking, you can unlock the full potential of your distributed systems.

Robust networking is of utmost importance in containerized environments. As Kubernetes provides dynamic scaling, horizontal pod autoscaling, and rolling updates, a reliable networking infrastructure becomes essential for uninterrupted operations. It allows your applications to adapt to changing demands, effectively scale across nodes, and handle traffic efficiently. By prioritizing robust networking, you can ensure the optimal performance and scalability of your containerized applications.

Kubernetes Networking Components

In Kubernetes, networking components play a vital role in enabling communication between various application components. Let's explore three essential networking components: pods, services, and ingress.

Pods: Pods are the fundamental units of deployment in Kubernetes, encapsulating one or more containers. They are assigned unique IP addresses and are the primary entities responsible for executing application logic. Pods within a cluster can communicate with each other over the network.

To facilitate communication, Kubernetes assigns an IP address to each pod. This IP address is routable within the cluster, allowing pods to connect using standard networking protocols. The IP address is typically assigned from the cluster's Pod CIDR range, ensuring uniqueness and avoiding conflicts.
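As a rough illustration of how a Pod CIDR range can guarantee unique addresses, the sketch below splits a cluster-wide range into one subnet per node and hands out addresses from each subnet. The range 10.244.0.0/16, the /24 per-node size, and the helper names are assumptions chosen for the example; the actual allocation is done by the cluster's control plane and network plugin and may differ.

```python
import ipaddress


def node_pod_cidrs(cluster_cidr, node_prefix, node_count):
    """Split the cluster-wide Pod CIDR into one subnet per node."""
    subnets = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_prefix)
    return [next(subnets) for _ in range(node_count)]


def first_pod_ips(node_cidr, count):
    """Return the first `count` usable addresses in a node's subnet."""
    hosts = iter(node_cidr.hosts())
    return [str(next(hosts)) for _ in range(count)]


# Assumed values, for illustration only.
cidrs = node_pod_cidrs("10.244.0.0/16", 24, 3)
for index, cidr in enumerate(cidrs):
    print(f"node-{index}: {cidr} -> {first_pod_ips(cidr, 2)}")
```

Because the per-node subnets never overlap, two pods on different nodes cannot be given the same address. Real plugins usually reserve some addresses (for example, a gateway) that this simplified sketch hands out.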

Additionally, pods rely on network namespaces and Container Network Interface (CNI) plugins for their networking capabilities. Network namespaces provide isolation and separation of network resources between pods. CNI plugins, on the other hand, are pluggable components that handle network configuration and management for each pod.

Here's an example of a Kubernetes YAML manifest defining a pod:

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
spec:
  containers:
    - name: nginx-container
      image: nginx:latest
      ports:
        - containerPort: 80

Save the manifest as nginx-pod.yaml, then create the pod by running this command in your terminal:

kubectl apply -f nginx-pod.yaml

After creating this pod, you can access the NGINX server from inside the cluster by using the pod's IP address and port 80, the port the stock NGINX image listens on. Other pods or services within the cluster can communicate with this NGINX pod by addressing it using its IP address and port.

To view the IP address assigned to this pod, run the following kubectl command:

kubectl get pod nginx-pod -o jsonpath='{.status.podIP}'

Services: Services in Kubernetes provide a stable endpoint for accessing pods, enabling load balancing and service discovery. They abstract the underlying pod IP addresses and provide a consistent interface for clients to interact with.

Different service types (ClusterIP, NodePort, LoadBalancer) and their use cases:

ClusterIP: This is the default service type. It exposes the service on an internal IP within the cluster. Other pods within the cluster can access the service using the ClusterIP. ClusterIP services are typically used for intra-cluster communication when you want to expose a service internally.

apiVersion: v1
kind: Service
metadata:
  name: my-clusterip-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
  type: ClusterIP

NodePort: This service type exposes the service on a specific port on each node in the cluster (by default, a port in the range 30000-32767). It allows external access to the service by targeting any node's IP address along with that port. NodePort services are commonly used during development and testing scenarios.

apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
  type: NodePort

LoadBalancer: This service type uses an external load balancer provided by the cloud provider to expose the service externally. The load balancer distributes the incoming traffic among the service endpoints, ensuring high availability and scalability. LoadBalancer services are commonly used in production environments.

apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service
spec:
  selector:
    app: my-app
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
  type: LoadBalancer
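All three Service manifests above use the selector app: my-app and forward to targetPort 8080. The sketch below is a simplified model of how such a selector could be resolved into a list of backend endpoints: a pod qualifies when its labels contain every key/value pair in the selector and it is ready. The pod list, IP addresses, and function names are hypothetical, and the real endpoint bookkeeping inside Kubernetes is more involved.

```python
def selector_matches(selector, labels):
    """A pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def service_endpoints(selector, target_port, pods):
    """List ip:port backends for ready pods whose labels match the selector."""
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod["ready"] and selector_matches(selector, pod["labels"])
    ]


# Hypothetical pods, for illustration only.
pods = [
    {"ip": "10.244.0.5", "labels": {"app": "my-app"}, "ready": True},
    {"ip": "10.244.1.7", "labels": {"app": "my-app", "tier": "web"}, "ready": True},
    {"ip": "10.244.1.8", "labels": {"app": "other-app"}, "ready": True},
    {"ip": "10.244.2.3", "labels": {"app": "my-app"}, "ready": False},
]

print(service_endpoints({"app": "my-app"}, 8080, pods))
```

Clients keep talking to the one stable service address while the endpoint list behind it changes as pods come and go.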

Service discovery mechanisms for seamless communication between services:

In Kubernetes, service discovery is a critical component for enabling seamless communication between services. It allows services to locate and connect with each other without hardcoding specific IP addresses or endpoints. The specific mechanisms used may vary depending on the type of service. Kubernetes offers two primary service discovery mechanisms:

DNS-Based Service Discovery: This is a fundamental mechanism in Kubernetes that allows services to be discovered using DNS names. Each service created in Kubernetes is assigned a DNS name in the form <service-name>.<namespace>.svc.cluster.local. This DNS name can be used by other components, such as pods or services, to resolve and connect to the service.

DNS-based service discovery works consistently across different service types, including ClusterIP, NodePort, and LoadBalancer. It provides a standardized way of accessing services within the cluster.

Environment Variables: Kubernetes automatically injects environment variables into containers running in pods. These environment variables provide information about the services running in the cluster, including their IP addresses and ports. By using these environment variables, applications within the pods can easily discover and connect to the services.

The environment variables for a service are derived from the service's name. They follow the format <SERVICE_NAME>_SERVICE_HOST and <SERVICE_NAME>_SERVICE_PORT, where <SERVICE_NAME> is the service name in uppercase with dashes converted to underscores.

For example, if you have a service named my-service, Kubernetes sets environment variables such as MY_SERVICE_SERVICE_HOST and MY_SERVICE_SERVICE_PORT in the pods. These variables contain the cluster IP address and port of my-service, allowing the application within the pod to establish communication with the service. Note that a pod only receives variables for services that already existed when the pod was created.

It's important to note that the specific implementation and configuration details may vary based on the service type and the Kubernetes platform being used. However, the underlying concepts of service discovery mechanisms remain consistent across service types.
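The two discovery mechanisms can be sketched from the application's point of view as follows. The helper names are hypothetical. The DNS name assumes the default cluster domain cluster.local, and the variable names follow the SERVICE_HOST / SERVICE_PORT convention described in the Kubernetes documentation (service name uppercased, dashes turned into underscores). The fallback order is one design choice among several, not a Kubernetes requirement.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the in-cluster DNS name of a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_env_names(service):
    """Derive the names of the injected host and port variables."""
    prefix = service.upper().replace("-", "_")
    return f"{prefix}_SERVICE_HOST", f"{prefix}_SERVICE_PORT"


def service_address(service, namespace, default_port, environ):
    """Prefer injected environment variables, fall back to the DNS name."""
    host_var, port_var = service_env_names(service)
    host = environ.get(host_var, service_dns_name(service, namespace))
    port = int(environ.get(port_var, default_port))
    return host, port


# Inside a pod you would normally pass os.environ; a plain dict keeps the
# sketch runnable anywhere. The IP address below is made up.
injected = {"MY_SERVICE_SERVICE_HOST": "10.96.0.12", "MY_SERVICE_SERVICE_PORT": "8080"}
print(service_address("my-service", "default", 8080, injected))
print(service_address("my-service", "default", 8080, {}))
```

Relying on the DNS name alone is often simpler, since it also works for services created after the pod started.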

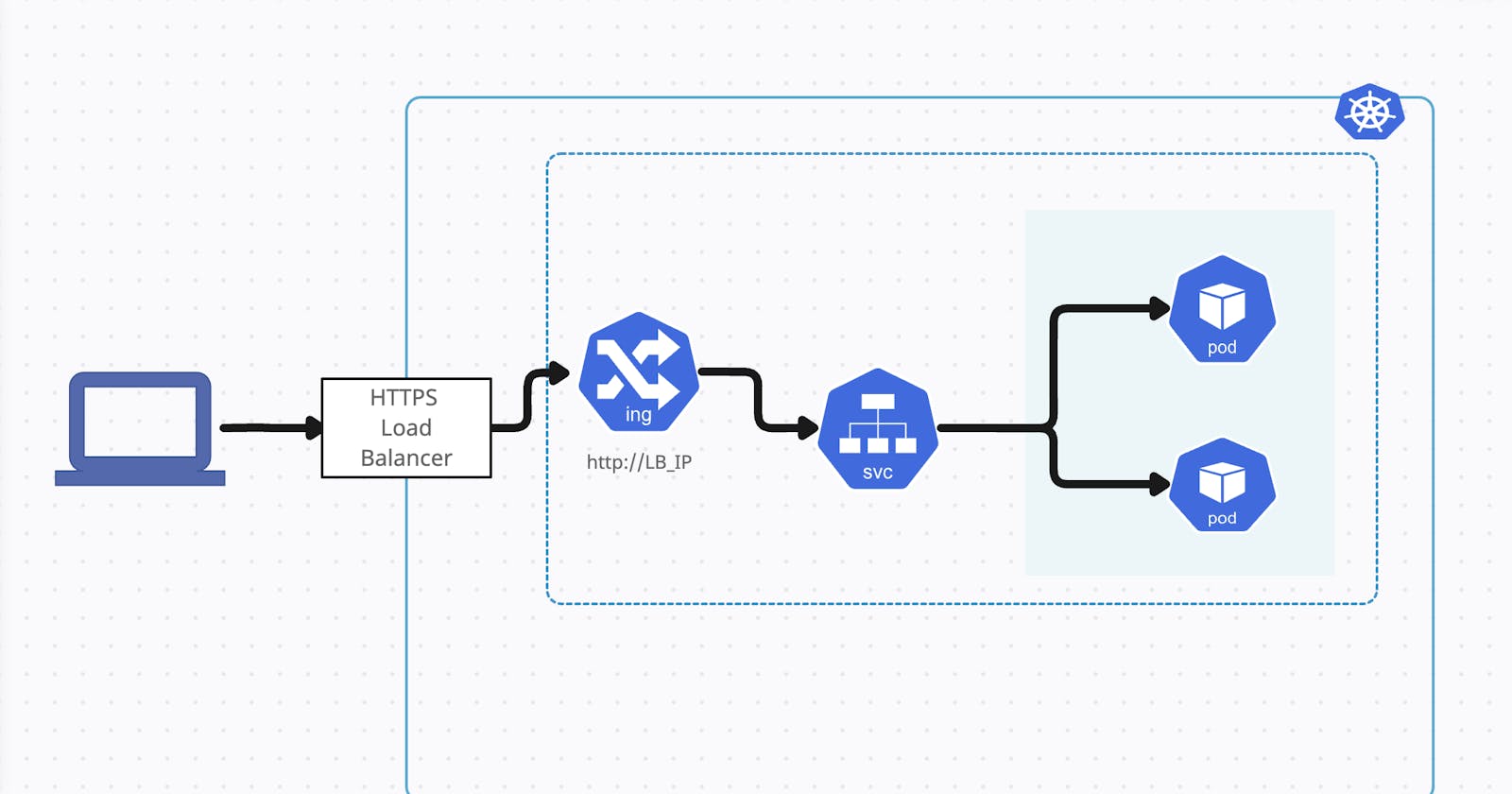

Ingress: Managing external access to services: Ingress in Kubernetes acts as an entry point for external traffic to reach services within the cluster. It provides a powerful and flexible way to manage inbound connections, SSL termination, and routing based on URL paths or hostnames. To enable Ingress functionality, an Ingress controller is required. The controller watches for Ingress resources and manages the ingress rules and traffic routing accordingly. Examples of popular Ingress controllers include Nginx Ingress Controller, Traefik, and Istio Gateway. By configuring rules in the Ingress resource, you can define how incoming requests should be forwarded to the appropriate services based on URL paths or hostnames. Ingress provides an elegant way to manage external access to services without exposing them directly to the outside world.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-ingress
spec:
  rules:
    - host: mydomain.com
      http:
        paths:
          - path: /app
            pathType: Prefix
            backend:
              service:
                name: my-loadbalancer-service
                port:
                  number: 8080

In the example above, the Ingress resource defines a rule where requests coming to mydomain.com/app will be forwarded to the my-loadbalancer-service service on port 8080.

The Ingress controller, running in the cluster, continuously monitors Ingress resources and configures the underlying load balancer or reverse proxy to route external traffic to the appropriate services based on the defined rules.
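To make the routing rule more concrete, here is a minimal sketch of host and path matching for a rule with pathType Prefix. Prefix matching works on whole path elements, so /app matches /app and /app/login but not /application. The rule table and function names are hypothetical, and real Ingress controllers add more logic (for example, preferring the longest matching path) that this sketch leaves out.

```python
def prefix_matches(rule_path, request_path):
    """Compare paths element by element, as pathType: Prefix does."""
    rule_parts = [part for part in rule_path.split("/") if part]
    request_parts = [part for part in request_path.split("/") if part]
    return request_parts[: len(rule_parts)] == rule_parts


def route(rules, host, path):
    """Return (service, port) for the first matching rule, else None."""
    for rule in rules:
        if rule["host"] == host and prefix_matches(rule["path"], path):
            return rule["service"], rule["port"]
    return None


# Mirrors the single rule from the Ingress manifest above.
rules = [
    {"host": "mydomain.com", "path": "/app",
     "service": "my-loadbalancer-service", "port": 8080},
]

print(route(rules, "mydomain.com", "/app/login"))
print(route(rules, "mydomain.com", "/application"))
print(route(rules, "other.com", "/app"))
```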

Network Namespaces and Container Networking Interfaces (CNIs)

In Kubernetes, network namespaces are a key concept that allows for isolation and segregation of network resources. A network namespace provides an isolated network stack, including network interfaces, routing tables, and firewall rules. Each pod in Kubernetes has its own network namespace, enabling network isolation between pods.

The significance of network namespaces in Kubernetes networking lies in their ability to ensure that each pod has its own unique network environment. This isolation prevents conflicts and provides security by separating the network traffic of different pods.

apiVersion: v1
kind: Pod
metadata:
  name: my-nginx-pod-1
spec:
  containers:
    - name: nginx-container
      image: nginx:latest
      ports:
        - containerPort: 80
---
apiVersion: v1
kind: Pod
metadata:
  name: my-nginx-pod-2
spec:
  containers:
    - name: nginx-container
      image: nginx
      ports:
        - containerPort: 80

In this example, we have two pods (my-nginx-pod-1 and my-nginx-pod-2), both running Nginx as the container image. By deploying these pods, you can observe that they run on the same Kubernetes cluster, both listening on port 80, without interfering with each other's network connectivity. Each pod has its own IP address, routing table, and network stack, ensuring network isolation and security.
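The following toy model illustrates why both pods can listen on port 80 at the same time. It is not how the Linux kernel implements namespaces. It only treats each pod as having its own IP address and its own table of bound ports. The class, the IP addresses, and the sidecar container are all hypothetical.

```python
class NetworkNamespace:
    """Toy stand-in for a pod's network namespace: one IP, one port table."""

    def __init__(self, pod_name, ip):
        self.pod_name = pod_name
        self.ip = ip
        self.listeners = {}  # port -> name of the container bound to it

    def bind(self, container, port):
        """Claim a port inside this namespace, failing if it is taken."""
        if port in self.listeners:
            owner = self.listeners[port]
            raise OSError(f"{self.pod_name}: port {port} already used by {owner}")
        self.listeners[port] = container
        return f"{self.ip}:{port}"


pod_1 = NetworkNamespace("my-nginx-pod-1", "10.244.0.11")
pod_2 = NetworkNamespace("my-nginx-pod-2", "10.244.0.12")

# Separate namespaces: the same port number does not collide.
print(pod_1.bind("nginx-container", 80))
print(pod_2.bind("nginx-container", 80))

# Containers inside one pod share its namespace, so here the port collides.
try:
    pod_1.bind("sidecar", 80)
except OSError as error:
    print("conflict:", error)
```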

The network namespaces ensure that the network resources, such as network interfaces, routing tables, and firewall rules, are separate for each pod. This isolation prevents conflicts and allows each pod to have its unique network environment.

The use of network namespaces in Kubernetes networking is crucial for creating a secure and isolated environment for pods to operate, enabling efficient communication while preventing interference and conflicts between different pods.

- Exploring the role of CNIs as network plugins for Kubernetes:

CNI (Container Network Interface) plugins are pluggable network components in Kubernetes that are responsible for configuring network connectivity for pods and providing networking functionality within a cluster. They interface with the underlying network infrastructure to establish networking capabilities for pods.

The role of CNIs is to handle tasks such as assigning IP addresses to pods, managing routing, enabling inter-pod communication, and implementing network policies. They abstract the complexities of networking and provide a consistent interface for managing and configuring networking aspects in a Kubernetes cluster.

- Overview of popular CNIs and their integration with the Kubernetes ecosystem:

Several popular CNIs are widely used in the Kubernetes ecosystem. Here are a few examples:

Calico: Calico is an open-source CNI plugin that provides network policy enforcement and enables secure network connectivity between pods. It leverages the standard Linux networking components, such as network namespaces, routing tables, and iptables, to provide a robust networking solution.

Flannel: Flannel is another popular CNI plugin that focuses on providing a simple and lightweight networking solution for Kubernetes. It utilizes an overlay network to establish communication between pods and supports various backend options, including VXLAN and host-gw.

Cilium: Cilium is a CNI plugin that combines networking and security functionality using eBPF (extended Berkeley Packet Filter) technology. It offers advanced networking features like load balancing, network visibility, and fine-grained network policies.

These plugins integrate with Kubernetes through the CNI specification, which defines how the container runtime on each node invokes a network plugin when a pod's network is set up or torn down. Kubernetes allows administrators to select and deploy the desired CNI plugin based on their specific networking requirements.
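As a rough picture of that interaction, the CNI specification has the runtime execute a plugin binary with a handful of CNI_* environment variables and a JSON network configuration on standard input. The sketch below only builds such a request and does not run any plugin. The function name, container ID, namespace path, subnet, and plugin directory are illustrative assumptions, and the configuration uses the reference bridge and host-local plugins rather than Calico, Flannel, or Cilium.

```python
import json


def build_cni_add_request(container_id, netns_path, ifname="eth0"):
    """Assemble the environment and stdin payload for a CNI ADD call."""
    env = {
        "CNI_COMMAND": "ADD",            # attach the container to the network
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,         # the pod's network namespace
        "CNI_IFNAME": ifname,            # interface to create inside the pod
        "CNI_PATH": "/opt/cni/bin",      # assumed plugin directory
    }
    network_config = {
        "cniVersion": "1.0.0",
        "name": "example-pod-network",   # hypothetical network name
        "type": "bridge",                # which plugin binary to run
        "bridge": "cni0",
        "isGateway": True,
        "ipMasq": True,
        "ipam": {
            "type": "host-local",
            "ranges": [[{"subnet": "10.244.1.0/24"}]],
        },
    }
    return env, json.dumps(network_config, indent=2)


env, stdin_payload = build_cni_add_request("example-container-id", "/var/run/netns/example")
print(env["CNI_COMMAND"], env["CNI_NETNS"])
print(stdin_payload)
```

The plugin answers with a JSON result describing the interface and the IP address it assigned, which is how the pod ends up with its address.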

Conclusion

Kubernetes networking is vital for containerized environments because it enables communication between application components. Pods, services, and ingress work together to make those interactions seamless. Network namespaces isolate each pod's network stack, while CNI plugins such as Calico, Flannel, and Cilium provide pluggable cluster networking. Features like service discovery and load balancing build on this foundation. Troubleshooting and monitoring remain essential for a healthy networking environment. By following best practices, you can build robust networking solutions in Kubernetes.